Here you can find some relevant projects in which I am or have been involved.

Currently Funded Projects as PI

|

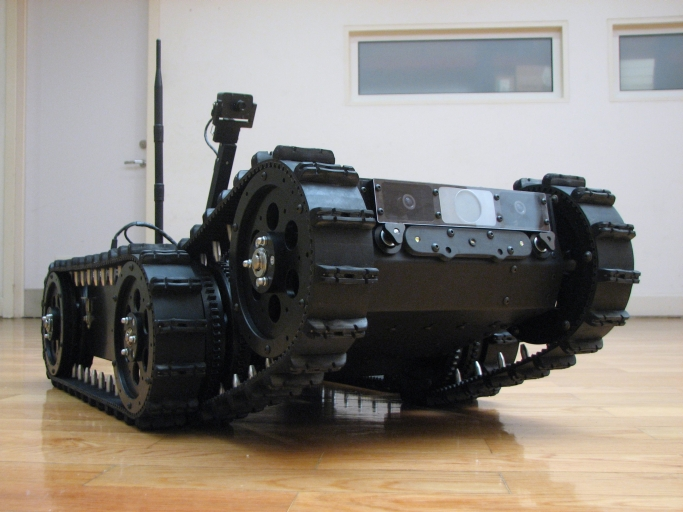

SIAR: Sewer Inspection Autonomous Robot (ECHORD++)

The SIAR project will develop a fully autonomous ground robot able to navigate and inspect the sewage system with minimal human intervention, while allowing the vehicle or the sensor payload to be controlled manually when required.

The project uses IDMind's RaposaNG robot platform as its starting point. A new robot will be built on this know-how, with the following key advances beyond the state of the art needed to properly address the challenge: a robust IP67 robot frame designed to work in the harshest environmental conditions, with increased power autonomy and flexible inspection capabilities; robust, extended communication capabilities; onboard autonomous navigation and inspection capabilities; and usability and cost-effectiveness of the developed solution.

|

|

TERESA: Telepresence Reinforcement-Learning Social Agent

TERESA aims to develop a telepresence robot of unprecedented social intelligence, thereby helping to pave the way for the deployment of robots in settings such as homes, schools, and hospitals that require substantial human interaction. In telepresence systems, a human controller remotely interacts with people by guiding a remotely located robot, allowing the controller to be more physically present than with standard teleconferencing. We will develop a new telepresence system that frees the controller from low-level decisions regarding navigation and body pose in social settings. Instead, TERESA will have the social intelligence to perform these functions automatically.

|

OCELLIMAV: New sensing and navigation systems based on Drosophila's OCELLI for Micro Aerial Vehicles

Micro unmanned Aerial Vehicles (MAVs) may open up a plethora of new applications for aerial robotics, in both indoor and outdoor scenarios. However, the limited payload of these vehicles restricts the sensors and processing power they can carry and, thus, the level of autonomy they can achieve without relying on external sensing and processing. Flying insects like Drosophila, on the other hand, can carry out impressive maneuvers with a relatively small neural system. This project will explore the biological fundamentals of Drosophila's ocelli sensory-motor system, one of the mechanisms most likely related to flight stabilization, and the possibility of deriving new sensing and navigation systems for MAVs from it.

|

|

PAIS-MultiRobot: Perception and Action under Uncertainties in Multi-Robot Systems

The main objective of the project is the development of efficient methods for dealing with uncertainties in robotic systems and, in particular, in teams of multiple robots. One of the objectives is the development of a scalable cooperative perception system able to reason about the uncertainties associated with the sensing system in the multi-robot platform. The efficient application of online Partially Observable Markov Decision Processes (POMDPs) to this problem will be analyzed. In the project, we aim to demonstrate these methods on real robotic systems for applications like surveillance.

|

Other Current Projects

|

EC-SAFEMOBIL: Estimation and control for safe wireless high mobility cooperative industrial systems

Autonomous systems and unmanned aerial vehicles (UAVs) can play an important role in many applications, including disaster management and the monitoring and measurement of events such as the volcanic ash cloud of April 2010. Currently, many missions cannot be accomplished, or involve a high level of risk for the people involved (pilots and drivers), because unmanned vehicles are not available or not permitted. This also applies to search and rescue missions, particularly in stormy conditions, where pilots need to risk their lives. These missions could be performed or facilitated by using autonomous helicopters with accurate positioning and the ability to land on mobile platforms such as ship decks. These applications strongly depend on the UAV's ability to react reliably, in a predictable and controllable manner, in spite of perturbations such as wind gusts. On the other hand, the cooperation, coordination and traffic control of many mobile entities are relevant issues for applications such as the automation of industrial warehousing, surveillance using aerial and ground vehicles, and transportation systems. EC-SAFEMOBIL is devoted to the development of sufficiently accurate common motion estimation and control methods and technologies, in order to reach the levels of reliability and safety needed to facilitate unmanned vehicle deployment in a broad range of applications. It also includes the development of a secure architecture and the middleware to support the implementation. Two different kinds of applications are included in the project:

|

Some Past Projects

|

FROG: Fun Robotic Outdoor Guide

FROG proposes to develop a guide robot with a winning personality and behaviours that will engage tourists in a fun exploration of outdoor attractions. The work encompasses innovation in the areas of vision-based detection, robotics design and navigation, human-robot interaction, affective computing, intelligent agent architecture and dependable autonomous outdoor robot operation.

|

|

CONET: Cooperating Objects Network of Excellence

The vision of Cooperating Objects is relatively new and needs to be understood in more detail and extended with inputs from the relevant individual communities that compose it. This will enable us to better understand the impact on the research landscape and to steer the available resources in a meaningful way.

|

|

URUS: Ubiquitous networking Robotics in Urban Settings

The URUS project focused on designing a network of robots that cooperatively interact with human beings and the environment for tasks such as assistance, transportation of goods, and surveillance in urban areas. Specifically, the objective of the project was to design and develop a cognitive network robot architecture that integrates cooperating urban robots, intelligent sensors, intelligent devices and communications.

|

|

COMETS: Real-Time Coordination and Control of Multiple Heterogeneous Unmanned Aerial Vehicles

The main objective of COMETS is to design and implement a distributed control system for cooperative activities using heterogeneous Unmanned Aerial Vehicles (UAVs). In particular, both helicopters and airships are included.

A key aspect of this project is experimentation: local UAV experiments and general multi-UAV demonstrations. I was directly involved in the project, within which I carried out most of my thesis work.

|

|

SPREAD:

SPREAD provides a framework for the development and implementation of an integrated forest fire management system for Europe. It will develop an end-to-end solution with inputs from Earth observation and meteorological data, information on the human dimension of fire risk, and assimilation of these data in fire prevention and fire behaviour models.

|