
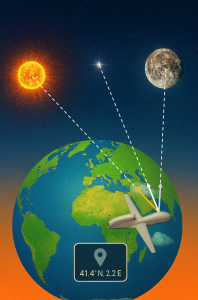

SSM-Track: Star, Sun & Moon Tracker

The Star, Sun & Moon Tracker project focuses on developing an advanced software solution for localizing drones using visual cues from celestial bodies (stars, the Sun and the Moon) as an alternative to traditional GNSS systems. The approach is particularly suited to applications in which GPS signals are vulnerable to critical interference such as spoofing or jamming, providing a resilient and independent means of navigation. Drawing inspiration from the classical celestial navigation methods used in maritime exploration, the project translates these principles into a modern context for unmanned aerial vehicles (UAVs), enabling reliable geolocation through onboard visual sensing and celestial modeling even under GNSS-compromised conditions. Although the system has been tested on aerial vehicles, it could be extended to the space segment. Technically, the system comprises three independent but complementary modules: a star tracker and a Moon tracker for night-time localization, and a Sun tracker for daytime operation.
Each module is designed to extract the position of the corresponding celestial body from camera imagery, using precise timestamps together with attitude and altitude metadata. The star tracker detects and identifies stellar patterns and computes the UAV's absolute location by matching the observed constellations against astronomical databases. The Sun and Moon trackers, in turn, estimate the direction to the corresponding body and convert it into a normalized (unit) vector expressed in the drone's reference frame.
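As a rough illustration of that last step, the sketch below back-projects the pixel centroid of a detected Sun (or Moon) into a unit direction vector and rotates it into the drone's body frame. It is a sketch under stated assumptions, not the SSM-Track implementation: an ideal pinhole camera without lens distortion, the OpenCV camera-axis convention (x right, y down, z along the optical axis), a body frame with x forward, y right and z down, and a zenith-looking camera. The intrinsic matrix `K`, the mounting rotation `R_body_cam` and both function names are hypothetical.

```python
import numpy as np


def pixel_to_camera_ray(u: float, v: float, K: np.ndarray) -> np.ndarray:
    """Back-project pixel (u, v) to a unit ray in the camera frame.

    Assumes an ideal pinhole camera (no lens distortion) with the OpenCV
    convention: x right, y down, z along the optical axis.
    """
    pixel_h = np.array([u, v, 1.0])
    ray = np.linalg.solve(K, pixel_h)  # equivalent to K^-1 [u, v, 1]^T
    return ray / np.linalg.norm(ray)


def celestial_direction_body(u: float, v: float, K: np.ndarray, R_body_cam: np.ndarray) -> np.ndarray:
    """Unit vector towards a detected body (e.g. the Sun centroid) in the drone body frame."""
    d_cam = pixel_to_camera_ray(u, v, K)
    d_body = R_body_cam @ d_cam
    # A proper rotation preserves length; renormalising only absorbs round-off.
    return d_body / np.linalg.norm(d_body)


# Hypothetical intrinsics for a 640x480 sensor.
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])

# Hypothetical mounting on an FRD body frame (x forward, y right, z down):
# image right -> body right, image down -> body forward, optical axis -> body up.
R_body_cam = np.array([[0.0, 1.0, 0.0],
                       [1.0, 0.0, 0.0],
                       [0.0, 0.0, -1.0]])

sun_body = celestial_direction_body(320.0, 240.0, K, R_body_cam)
print(sun_body)  # a Sun at the image centre points straight up in FRD, i.e. along -z
```

In celestial navigation, such a measured direction would then be compared with the body's predicted (ephemeris) direction at the image timestamp, once the attitude is known; that step is outside this sketch.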


HuNavSim 2.0: A HUMAN NAVIGATION SIMULATOR FOR BENCHMARKING HUMAN-AWARE ROBOT NAVIGATION (euROBIN_20C_2)

The goal of HuNavSim is to facilitate the development and evaluation of human-aware robot navigation systems by means of a novel open-source tool for simulating realistic human navigation behaviors in scenarios with mobile robots. Evaluating a robot's ability to navigate in spaces shared with humans is essential to envisage new service robots working alongside people. The development of such mobile social robots poses several challenges. First, real experimentation with people is costly and difficult to perform and replicate, except in very limited, controlled scenarios, and current robotics simulators include simulated moving people with very limited behavior. Simulating more realistic human navigation behaviors is therefore necessary for the development of robot navigation techniques, and such a tool can impact the whole mobile robotics ecosystem. Second, evaluating robot navigation among people requires assessing not only the robot's efficiency but also the safety and comfort of the people around it. Thus, the tool developed under this project will include a thorough and suitable set of metrics for human-aware navigation.
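To make the efficiency, safety and comfort distinction concrete, here is a sketch of three simple trajectory metrics of the kind such a benchmark might report: robot path length (efficiency), minimum robot-human distance (safety), and the fraction of time steps in which someone is inside a personal-space radius (a comfort proxy). These are illustrative assumptions, not HuNavSim's actual metric set; the function names and the 0.5 m default radius are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def path_length(robot: Sequence[Point]) -> float:
    """Efficiency proxy: total distance travelled by the robot."""
    return sum(math.dist(a, b) for a, b in zip(robot, robot[1:]))


def min_human_distance(robot: Sequence[Point], humans: Sequence[Sequence[Point]]) -> float:
    """Safety proxy: smallest robot-human distance over the whole run.

    humans[t] lists the positions of all people at time step t, aligned with robot[t].
    Raises ValueError if no person is ever present.
    """
    return min(math.dist(r, h) for r, people in zip(robot, humans) for h in people)


def intrusion_ratio(robot: Sequence[Point], humans: Sequence[Sequence[Point]], radius: float = 0.5) -> float:
    """Comfort proxy: fraction of time steps with someone closer than `radius` metres."""
    hits = sum(
        any(math.dist(r, h) < radius for h in people)
        for r, people in zip(robot, humans)
    )
    return hits / len(robot)


# Hypothetical run: the robot drives along the x axis past a person standing at (2, 0.4).
robot = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
humans = [[(2.0, 0.4)]] * len(robot)  # same (static) person at every time step
print(path_length(robot), min_human_distance(robot, humans), intrusion_ratio(robot, humans))
```

Keeping the three quantities separate, rather than folding them into one score, lets a benchmark show that a faster robot may simply be one that cuts closer to people.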


PICRAH4.0 (PLEC2023-010353. INTELLIGENT AND CYBERSECURE PLATFORM FOR ADAPTIVE OPTIMIZATION IN THE SIMULTANEOUS OPERATION OF HETEROGENEOUS AUTONOMOUS ROBOTS)

The project ‘Intelligent and Cybersecure Platform for adaptive optimization in the simultaneous operation of Heterogeneous Autonomous Robots’ (PICRAH4.0) aims to remotely manage the operation of multiple heterogeneous robots, coordinating them so as to create a dynamic and collaborative ecosystem that allows greater productivity and energy efficiency. It is one of the projects selected in the TransMisiones 2023 call, an initiative of the State Research Agency (AEI) and the Center for Technological Development and Innovation (CDTI) to promote R&D consortia. The Service Robotics Lab of Pablo de Olavide University will lead the human-machine connection, where interaction interfaces based on natural language and large language models are created, as well as the collaborative robotics techniques. It will also participate in the deployment of Intelligent Robotic Control, based on machine learning and artificial intelligence, leading the development of intelligent, coordinated and interoperable navigation algorithms. PICRAH4.0 will enable interoperability not only among robotic platforms, but also with other assets present in production and logistics infrastructures, including human operators through natural language, extending the Industry 4.0 concept to the sphere of mobility and autonomous robotics. Through the application of different AI techniques, approaches and algorithms, PICRAH4.0 will securely integrate all the robotic and logistic processes identified in a production scenario, connecting them into a more productive, efficient, dynamic and collaborative ecosystem.


RATEC (Planning and localization of cable-connected aerial-ground robots for inspection and maintenance tasks, PDC2022-133643-C21)

The RATEC project will focus on the industrialization of a robotic prototype for long-term inspection and maintenance applications, especially in environments without GPS coverage such as tunnels, sewage systems or underground galleries, greenhouses and large logistics warehouses, as well as in search and rescue operations in natural disaster scenarios. The prototype will consist of a ground robot carrying the batteries, sensors and computing required to work autonomously, and an aerial robot connected to the ground system by a cable. This air-ground connection powers the aerial platform, offering very long flight times (bounded by the ground robot's battery), and allows part of the aerial robot's computation to be offloaded, reducing its payload limitations and thus improving its capabilities with respect to today's aerial robots. Likewise, the complete system offers far greater mobility than systems based on fixed cables. The project's research teams have a long history of developing technologies that enable the autonomous navigation of aerial and ground vehicles in GPS-denied environments. This experience has shown that the flight times of current aerial robots are, in general, shorter than those needed to carry out realistic inspection or monitoring missions. These reasons motivated the start, four years ago, of a line of research focused on the autonomous navigation of aerial robots connected by a power cable to ground robots, through the COMCISE National Plan project. As a result, and thanks to synergies with national and international companies, the project teams have integrated a TRL4 prototype of the system with most of the necessary functionalities. The objective of the RATEC project is thus to continue developing this prototype to achieve a proof-of-concept system at technology readiness level TRL7.
To this end, the project will industrialize the system's basic components, such as environment mapping, robot localization, robot control and navigation, and the perception system. In addition, experiments will be carried out in representative real environments, such as tunnels and underground galleries, that allow the prototype to be validated under the same conditions as a fully industrialized system. Finally, the prototype will be validated in a real industrial operating environment.


NORDIC (NOvel Robotic technologies for the DIgitalisation of the Construction sector. TED2021-132476B-I00)

The construction sector is a key pillar of the European Union (EU) economy, accounting for 18 million jobs and contributing almost 9% of GDP. Beyond its economic weight, the sector has a major social, environmental and climate impact, including on the quality of life of EU citizens, CO2 emissions and waste. Spain plays an important role in the EU construction sector, providing 11% of the EU's total jobs in the sector (2.1 million) and involving 723,507 enterprises, with a total turnover for the broad sector of 239.4 billion in 2019. While the construction sector is a key driver of the EU and Spanish economies, it faces several challenges relating to, inter alia, labour shortages, competitiveness, resource and energy efficiency, and productivity. Digital technologies and their integration into the construction sector are often viewed as a key element that can help tackle some of these challenges. However, construction is one of the least digitalised sectors of the economy. Among the key digital technologies recently identified by the “European Construction Sector Observatory” for the digitalisation of construction, robotics, drones and Building Information Modelling (BIM) stand out as the most prominent and transversal, affecting the four basic pillars of the sector: Design & Engineering, Construction, Operation & Maintenance, and Renovation & Demolition. NORDIC will focus on these digitalisation technologies to maximise its impact. Although the deployment of autonomous robots and, above all, drones in construction has grown significantly in the last decade, many construction-related tasks still cannot be tackled with current robotic technologies. Thus, the main goal of NORDIC is to advance the state of the art of the technologies required for the safe operation of teams of robots, comprising UAVs and UGVs, on construction sites.
The project will develop new techniques for localisation in such scenarios that exploit BIM models, and for safe navigation in these complex, cluttered environments, explicitly considering the presence of humans around the robots. To this end, the following global objectives will be pursued: O1 – Reliable perception and SLAM for automation in construction: within this objective, we will develop robust robot localisation methods able to work in construction scenarios. Furthermore, reliable semantic perception of hazards, people and construction items will also be developed and integrated into BIM models. O2 – Robust and safe navigation of robots in dynamic construction sites: this objective deals with the development of safe autonomous robot navigation systems for cluttered 3D environments to enable complex missions. The approaches will use BIM models as a complement to improve robot planning. People will be explicitly integrated into the navigation approaches, yielding human-aware navigation. O3 – Demonstrate robotic functionalities for construction: the project will show a proof of concept, in realistic scenarios, of two main functionalities: autonomous updating of BIM models using ground and aerial vehicles and semantic mapping; and drone-based monitoring of hazardous situations and of workers' personal protective equipment (PPE) for occupational safety.


INSERTION: Inspection and maiNtenance in harSh EnviRonments by mulTI-robot cooperatiON. Subproject: Robust Localization, Mapping and Planning in Harsh Environments (PID2021-127648OB-C31)

Inspection and Maintenance (I&M) robotics is a growing application area with great potential social and economic impact, in particular in hazardous and dangerous environments. Bringing robot automation to these environments will help to significantly reduce the risks associated with the operation and improve working conditions. Indeed, numerous I&M tasks performed periodically in both the industry and service sectors expose workers to serious risks, or are tedious and carried out in harsh conditions: sewers, offshore oil and gas platforms, wind turbines, gas/power transportation tunnels, mines and industrial storage tanks are good examples among many others. While the deployment of autonomous robots for I&M has evolved significantly in the last decade, most advances have taken place in scenarios where robot localization can be estimated precisely, with well-known characteristics and geometry that also facilitate autonomous robot operation. However, many I&M tasks in several industry sectors cannot be tackled with current robotic technologies due to the harsh environmental conditions involved. A harsh environment can be defined as a scenario that is challenging for agents to operate in, including environments that are remote, unknown, cluttered, dynamic, unstructured or of limited visibility. This project focuses on application areas such as offshore platforms and wind turbines, large cargo ships and construction sites. These applications are especially challenging due to their environmental conditions, including fog, dust, rain, varying illumination and/or insufficient texture, rough terrain, wind gusts, turbulence, and high degrees of clutter such as hanging cables, moving obstacles and human workers.
In addition to dealing with harsh environments from the perception and control points of view, automating I&M tasks in these application scenarios requires a heterogeneous team of robots to cope with such complex and diverse environments: UGVs for floor-level tasks; UAVs for vertical, under-platform and facade inspection tasks; and USVs for sea-level inspection and team support. Furthermore, some tasks will require tight cooperation among robots, such as UAVs taking off from and landing on USVs, or UAVs tethered to a UGV/USV to perform long-term I&M tasks. Thus, the main goal of the project is to advance the state of the art of the technologies required for the safe operation of teams of robots, comprising UAVs, UGVs and USVs, for I&M in harsh environments. The project will develop new techniques for localization in such low-visibility scenarios; navigation in these complex, cluttered environments; manipulation/intervention; cooperation among UAVs, UGVs and USVs; and control strategies for the safe operation of UAVs and USVs in complex environments. To this end, the project will coordinate three subprojects and exploit the synergies between the expertise in robot localization, mapping and planning of the Service Robotics Laboratory (SRL) of the Universidad Pablo de Olavide (UPO, coordinator); the expertise in UAV control and perception techniques of the Computer Vision and Aerial Robotics group (CVAR) of the Universidad Politécnica de Madrid (UPM); and the expertise in USVs and robot coordination of the “Ingeniería de Sistemas, Control, Automatización y Robótica” (ISCAR) group of the Universidad Complutense de Madrid (UCM).


RETMUR: Red española de tecnologías Multi-Robot (Spanish Network of Multi-Robot Technologies)

The fundamental objective of RETMUR is to promote research and development in Multi-Robot technologies in Spain. Although the Spanish scientific community is well positioned in robotics research, with research groups that are competitive at the international level, a thematic network in this area is pertinent, since the development of Multi-Robot systems presents additional challenges for science and engineering. Research in Multi-Robot technologies is complicated by the associated technical, material and experimental difficulties. Researchers must cover the material cost of many robotic platforms and their maintenance. In many cases, it is necessary to rent dedicated infrastructure to carry out experiments. Finally, working with Multi-Robot systems usually requires a large number of personnel during experimentation to guarantee safety. That is why research in this area can clearly benefit from collaboration between teams. The RETMUR network will therefore contribute to improving the results of research projects on this subject by joining the efforts of experienced national research groups to alleviate the difficulties associated with developing Multi-Robot systems. The RETMUR team integrates research groups with experience in the different domains (air, land and sea), as well as in the fundamental areas of Multi-Robot systems: perception, localization, planning, coordination, communication and control. In short, RETMUR aims to promote the visibility of the research carried out in Spain in this field and to strengthen existing synergies, improving the opportunities for the Spanish robotics community to carry out research on this subject.


NHoA: Never Home Alone

The long-term vision of the Never Home Alone (NHoA) project is the development of a robotic system that helps elderly people live independently in their homes and prevents loneliness and isolation. Loneliness is a direct consequence of the demographic shift towards an ageing population, and it negatively affects the well-being and mental health of the elderly, leading to an increasing incidence of depression and social exclusion. Inspired by the scientific experience of caregivers, we aim to develop robots that mitigate loneliness by encouraging contact within the user's social network, emulating group therapies. NHoA brings together the multidisciplinary expertise needed to tackle this challenge. The consortium combines partners with expertise in digital health and elder care, scientists working on social, affective and human-aware robotics, and two companies with business and research experience in the use of robots for social and medical care. The developments will be rooted in i) an iterative co-design process involving the healthcare partners. We will then push the state of the art on key robot capabilities that are still at low readiness levels. In particular, we hypothesize that social intelligence is the key to robustness and adaptability. Therefore, the project objectives aim to produce ii) novel multi-modal emotion recognition methods for affective interaction; iii) robust contextual and semantic scene understanding in homes; and iv) adaptive, legible and emotionally expressive behaviours, considering both tabletop and mobile robots. The robot system will incorporate v) novel cloud-based health monitoring and behaviour-change recognition that interacts with healthcare professionals. All these elements will be integrated by vi) a socially situated decision-making component that turns the robots into embodied social actors able to sense the social and emotional environment and proactively intervene to build an affective relationship with the user.


Research and Development for a Social Tabletop Robot

In this project, we are contributing to the development of the ROS-based software architecture supporting a social tabletop robot. This architecture integrates robot drivers and sensor interfacing, motion control and high-level decision making, multi-modal perception for social interaction, dialogue and non-verbal communication, and memory for long-term interaction. As part of the project, we are also carrying out research on social motion planning and control, including motion generation for robot expressivity, transferring motion from animation, social robot decision making, and the application of the robot to different scenarios.


DeepBot: Novel Perception and Navigation Techniques for Service Robots based on Deep Learning

The last decade has seen unprecedented advances in machine learning with the advent of so-called deep networks. The enormous potential of deep learning has not gone unnoticed in robotics, where it has recently been applied successfully, especially to environment perception tasks. However, deep learning is not free of problems when applied to robotics: the learning process requires large amounts of data and/or interactions to converge to generalizable solutions, which in many cases are not feasible to obtain. Furthermore, in most cases the available data do not reflect the environments and conditions of robotic systems. Additionally, the approaches must take into account the dynamic and computational constraints to which robotic systems are subject. Two promising lines of work address these problems. On the one hand, self-supervised approaches significantly reduce the labeling effort. On the other hand, new model-based differentiable layers, capable of carrying out analytical operations known a priori, also reduce the amount of labeled data and the training time required, thanks to the smaller number of trainable parameters, while allowing system constraints to be considered explicitly. The DeepBot project moves in this direction. It pursues mixed solutions that combine the best classical techniques for localization, planning and people perception with the latest advances in deep learning, creating solutions that go beyond the current state of the art in terms of precision, speed or generality, and thus giving rise to new applications for service robots.


TELEPoRTA: Machine Learning Techniques for Assistive Telepresence Robots

The project focuses on the development of new machine-learning-based technologies for telepresence robots, with special emphasis on assistive robotics applications. The main objective is to develop a semi-autonomous telepresence robot that allows human-aware and natural interaction with people, as well as methods, based on automatic scene description and image captioning, that enable cognitively impaired people to interact through telepresence. Accordingly, the project considers the following subgoals: 1) new machine learning techniques for social robot navigation; 2) new cognitive computing techniques for perception in social robots; 3) development of new semi-autonomous capabilities for assistive telepresence robots.


Collaboration with the Joint Research Centre, EU Commission, HUMAINT project

The HUMAINT project aims to provide a multidisciplinary understanding of the state of the art and future evolution of machine intelligence and its potential impact on human behaviour, with a focus on cognitive and socio-emotional capabilities and decision making. The project has three main goals: a) advance the scientific understanding of machine and human intelligence; b) study the impact of algorithms on humans, focusing on cognitive and socio-emotional development and decision making; c) provide insights to policy makers on the previous issues. Our group collaborates with the HUMAINT core team on robotics-related technologies and on studies of child-robot interaction.


COMplex Coordinated Inspection and SEcurity missions by uavs in cooperation with ugv (COMCISE)

The COMCISE project proposes a smart multi-robot system for inspection in GPS-denied areas, composed of a ground robot with sufficient computation and batteries and a detachable tethered flying robot equipped with sensors for inspection and navigation. The main hypothesis is that such a system offers the main benefits of both platforms: long inspection cycles and high manoeuvrability. UPO's role will center on cooperative planning, perception and navigation in the coordinated UAV-UGV system. The specific objectives are: Precise multi-robot cooperative estimation, localization and mapping: the objective is to localize the UGV+UAV system in GPS-denied areas based on the local sensors installed on both vehicles. The approaches will take advantage of the synergies between both systems and of the possibility of estimating the relative position of one robot with respect to the other. Multi-robot motion planning and control for tethered and untethered configurations: the objective is to develop new methods for motion planning and reactive collision avoidance of the whole UGV-UAV system, taking into account the restrictions induced by the tether, communications, sensor payloads, etc. Cooperative planning and perception for efficient inspection applications: the objective is to develop new active sensing techniques for multi-robot systems in perception tasks, able to handle uncertainties and constraints and to improve the perception results.
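One of the tether-induced restrictions can be illustrated with a simple feasibility test that a sampling-based planner might apply to candidate UAV positions. This is a minimal sketch, not the COMCISE method: it assumes a straight, taut tether from a fixed anchor on the UGV, spherical obstacles and a hypothetical safety margin; `tether_feasible` and `point_segment_distance` are illustrative names.

```python
import numpy as np


def point_segment_distance(p: np.ndarray, a: np.ndarray, b: np.ndarray) -> float:
    """Euclidean distance from point p to the segment a-b."""
    ab = b - a
    denom = float(ab @ ab)
    if denom == 0.0:  # degenerate segment: a and b coincide
        return float(np.linalg.norm(p - a))
    # Parameter of the closest point on the infinite line, clamped to the segment.
    t = np.clip(((p - a) @ ab) / denom, 0.0, 1.0)
    return float(np.linalg.norm(p - (a + t * ab)))


def tether_feasible(anchor, uav, tether_length, obstacles, margin=0.2) -> bool:
    """Return True if a UAV position is acceptable under a straight, taut tether model.

    anchor: tether exit point on the UGV (3-vector).
    uav: candidate UAV position (3-vector).
    obstacles: iterable of (centre, radius) spheres.
    margin: hypothetical clearance kept between the tether line and obstacles.
    """
    anchor = np.asarray(anchor, dtype=float)
    uav = np.asarray(uav, dtype=float)
    if np.linalg.norm(uav - anchor) > tether_length:
        return False  # out of reach even with a fully taut tether
    for centre, radius in obstacles:
        if point_segment_distance(np.asarray(centre, dtype=float), anchor, uav) < radius + margin:
            return False  # the straight tether line would touch the obstacle
    return True


anchor = (0.0, 0.0, 0.5)
print(tether_feasible(anchor, (2.0, 0.0, 3.0), 5.0, [((1.0, 2.0, 1.75), 0.3)]))  # obstacle well off the tether line
print(tether_feasible(anchor, (2.0, 0.0, 3.0), 5.0, [((1.0, 0.0, 1.75), 0.3)]))  # obstacle on the tether line
```

The length test is a necessary condition for any tether model, whereas the obstacle test only holds under the straight-tether assumption; a sagging tether can bend around obstacles and would need a catenary or cable-routing model.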


NIx: ATEX Certifiable Navigation Module for Ground Robotic Inspection (ESMERA)

The NIx project proposes an ATEX-certifiable navigation module for ground robotic inspection. The navigation module will be developed so that it can be easily integrated into different robot platforms. NIx development will be divided into two stages. Phase I will focus on the implementation and validation, in real experiments, of the whole localization and navigation stack. Phase II will focus on the industrialization of the localization and navigation system in accordance with the ATEX certification requirements, and on the usability of the system.


MBZIRC: Mohamed Bin Zayed International Robotics Challenge

Our lab will participate in the competition as part of the CVAR-UPM/SRLab-UPO/UAVRG-PUT team, together with our colleagues from the Universidad Politécnica de Madrid and Poznan University of Technology.


ARCO: Autonomous Robot CO-worker (HORSE)

The objective of this project is the development and integration of a human-robot co-working system for warehouse picking applied to production line feeding. The proposed system combines the best of both worlds: the extreme flexibility and adaptability of humans, and the safety and control of ground robots. This technology is particularly interesting for small and medium-sized factories where fully robotized warehouses are unaffordable or excessive, while it still allows the picking task to be optimized with respect to manual operation. The project rests on two main pillars: i) robust person detection and tracking for safe robot navigation: this new HORSE software component for detecting and tracking workers in the factory will integrate ultra-wide-band localization systems, image-based people detection and LIDAR-based people detection to build resilient software that exploits the synergies between different sensor modalities; ii) increased AGV autonomy to perform autonomous, people-aware navigation in dynamic, changing scenarios.


SIAR: Sewer Inspection Autonomous Robot (ECHORD++)

The SIAR project will develop a fully autonomous ground robot able to navigate and inspect the sewage system with minimal human intervention, with the possibility of manually controlling the vehicle or the sensor payload when required. The project uses IDMind's RaposaNG robot platform as its starting point. A new robot will be built on this know-how, with the following key steps beyond the state of the art required to properly address the challenge: a robust IP67 robot frame designed to work in the harshest environmental conditions, with increased power autonomy and flexible inspection capabilities; robust and increased communication capabilities; onboard autonomous navigation and inspection capabilities; and usability and cost-effectiveness of the developed solution. UPO leads the navigation tasks in the project.


TERESA: Telepresence Reinforcement-Learning Social Agent

TERESA aims to develop a telepresence robot of unprecedented social intelligence, thereby helping to pave the way for the deployment of robots in settings such as homes, schools and hospitals that require substantial human interaction. In telepresence systems, a human controller interacts remotely with people by guiding a remotely located robot, allowing the controller to be more physically present than with standard teleconferencing. We will develop a new telepresence system that frees the controller from low-level decisions regarding navigation and body pose in social settings. Instead, TERESA will have the social intelligence to perform these functions automatically. The project's main result will be a new partially autonomous telepresence system with the capacity to make socially intelligent low-level decisions for the controller. Sometimes this requires mimicking the human controller (e.g., nodding the head) by translating human behavior into a form suitable for a robot. Other times, it requires generating novel behaviors (e.g., turning to look at the speaker) expected of a mobile robot but not exhibited by a stationary controller. TERESA will semi-autonomously navigate among groups, maintain face-to-face contact during conversations, and display appropriate body-pose behavior. Achieving these goals requires advancing the state of the art in cognitive robotic systems. The project will not only generate new insights into socially normative robot behavior; it will also produce new algorithms for interpreting social behavior, navigating in human-inhabited environments, and controlling body poses in a socially intelligent way. The project culminates in the deployment of TERESA in an elderly day center. Because such day centers are a primary social outlet, many people become isolated when they cannot travel to them, e.g., due to illness. TERESA's socially intelligent telepresence capabilities will enable them to continue participating socially from a distance.

UPO leads WP4 within TERESA, “Socially Navigating the Environment”, which deals with the development of the navigation stack (including localization, path planning and execution) required to enable enhanced, socially normative, autonomous navigation of the telepresence robot.


OCELLIMAV: New sensing and navigation systems based on Drosophila's OCELLI for Micro Aerial Vehicles

Micro unmanned Aerial Vehicles (MAVs) may open up a plethora of new applications for aerial robotics, in both indoor and outdoor scenarios. However, the limited payload of these vehicles restricts the sensors and processing power they can carry and, thus, the level of autonomy they can achieve without relying on external sensing and processing. Flying insects like Drosophila, on the other hand, can carry out impressive maneuvers with a relatively small neural system. This project will explore the biological fundamentals of the Drosophila ocelli sensory-motor system, one of the mechanisms most likely related to fly stabilization, and the possibility of deriving new sensing and navigation systems for MAVs from it. The project will do so through a complete reverse engineering of the ocelli system, estimating the structure and functionality of its neural processing network and then modeling it through the interaction of biological and engineering research. Novel genetic-based neural tracing methods will be employed to extract the topology of the neural network, and behavioral experiments will be devised to determine the functionalities of specific neurons. The findings will be used to derive a model, which will then be used to characterize the relevant aspects from the point of view of estimation and control. The model will also serve to determine how this sensory-motor system can be adapted to current MAV platforms, and to design a proof-of-concept sensing and navigation system. The expected results of the project are twofold: to corroborate or challenge current assumptions about the use of ocelli in flies, and to create a proof-of-concept device for a MAV system based on the project's findings.


PAIS-MultiRobot: Perception and Action under Uncertainties in Multi-Robot Systems

The main objective of the project is the development of efficient methods for dealing with uncertainties in robotic systems and, in particular, in teams of multiple robots. One of the objectives is the development of a scalable cooperative perception system able to reason about the uncertainties associated with the sensing system of the multi-robot platform. The efficient application of online Partially Observable Markov Decision Processes (POMDPs) to this problem will be analyzed. In the project, we aim to demonstrate the developed methods on real robotic systems in applications such as surveillance.
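The operation that online POMDP solvers repeat at every node of their search is the Bayesian belief update. The sketch below shows it for a discrete state space (for example, which grid cell contains a target), fusing the observations of several robots under the assumption that they are conditionally independent given the state. It is an illustration of the standard update, not the project's solver; the function name and the detector probabilities in the example are hypothetical.

```python
import numpy as np


def belief_update(belief, T, obs_likelihoods):
    """One step of the discrete POMDP belief update (Bayes filter).

    belief:          b(s), shape (n,)
    T:               T[s, s'] = P(s' | s, a) for the action taken, shape (n, n)
    obs_likelihoods: one vector per robot, O_k[s'] = P(o_k | s', a); the robots'
                     observations are assumed conditionally independent given s'.
    """
    predicted = belief @ T  # prediction: sum_s b(s) P(s' | s, a)
    likelihood = np.ones_like(predicted)
    for o in obs_likelihoods:
        likelihood *= np.asarray(o, dtype=float)  # fuse robots by multiplying likelihoods
    posterior = likelihood * predicted
    total = posterior.sum()
    if total == 0.0:
        raise ValueError("observations are inconsistent with the predicted belief")
    return posterior / total  # normalise so the belief sums to one


# Hypothetical example: a static target in one of three cells, uniform prior.
b0 = np.full(3, 1.0 / 3.0)
T_static = np.eye(3)
# Robot 1 looks at cell 0 and reports a detection (P(detect | in view) = 0.8, false alarm 0.1).
o_robot1 = np.array([0.8, 0.1, 0.1])
# Robot 2 looks at cell 1 and reports nothing (P(no detect | in view) = 0.2, otherwise 0.9).
o_robot2 = np.array([0.9, 0.2, 0.9])
b1 = belief_update(b0, T_static, [o_robot1, o_robot2])
print(b1)  # most of the probability mass moves to cell 0
```

A full online POMDP planner would call this update for each simulated action and observation branch when evaluating candidate sensing actions; scaling that search to many robots is where the efficiency concerns mentioned above arise.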

EC-SAFEMOBIL: Estimation and control for safe wireless high mobility cooperative industrial systems

Autonomous systems and unmanned aerial vehicles (UAVs) can play an important role in many applications, including disaster management and the monitoring and measurement of events such as the volcanic ash cloud of April 2010. Currently, many missions cannot be accomplished, or involve a high level of risk for the people involved (pilots and drivers), because unmanned vehicles are not available or not permitted. This also applies to search and rescue missions, particularly in stormy conditions, where pilots must risk their lives. These missions could be performed or facilitated by autonomous helicopters with accurate positioning and the ability to land on mobile platforms such as ship decks. Such applications strongly depend on the UAV's ability to react in a predictable and controllable manner despite perturbations such as wind gusts. On the other hand, the cooperation, coordination and traffic control of many mobile entities are relevant issues for applications such as the automation of industrial warehousing, surveillance using aerial and ground vehicles, and transportation systems. EC-SAFEMOBIL is devoted to the development of sufficiently accurate common motion estimation and control methods and technologies in order to reach the levels of reliability and safety needed to facilitate unmanned vehicle deployment in a broad range of applications. It also includes the development of a secure architecture and the middleware to support the implementation. Two different kinds of applications are included in the project:

UPO's team is involved through a subcontract, dealing with the development of multi-vehicle planning under uncertainty and decentralized data fusion algorithms, led by Luis Merino. In addition, we developed novel strategies for the automatic control of rotary-wing helicopters in landing operations. This work was led by Manuel Béjar.

FROG: Fun Robotic Outdoor Guide

FROG proposes to develop a guide robot with a winning personality and behaviours that will engage tourists in a fun exploration of outdoor attractions. The work encompasses innovation in the areas of vision-based detection, robotics design and navigation, human-robot interaction, affective computing, intelligent agent architecture and dependable autonomous outdoor robot operation.

The FROG robot's fun personality and social visitor-guide behaviours aim to enhance the user experience. FROG's behaviours will be designed based on the findings of systematic social behavioural studies of human interaction with robots. FROG adapts its behaviour to the users through vision-based detection of human engagement and interest. Interactive augmented reality overlay capabilities in the body of the robot will enhance the visitors' experience and increase knowledge transfer, as information is offered through multi-sensory interaction. Gesture detection capabilities further allow visitors to manipulate the augmented reality interface to explore specific interests.

This is a unique project with respect to Human-Robot Interaction in that it considers the development of a robot's personality and behaviours to engage the users and optimise the user experience. We will design and develop those robot behaviours that complement a guide robot's personality, so that users experience and engage with the robot truly as a guide.

We lead WP2 within FROG, dealing with robot localization and social navigation. We are developing algorithms for robust localization and navigation in outdoor scenarios. Furthermore, we are working on models and tools for social navigation.

CONET: Cooperating Objects Network of Excellence

The vision of Cooperating Objects is relatively new and needs to be understood in more detail and extended with input from the individual communities that compose it. This will enable us to better understand its impact on the research landscape and to steer the available resources in a meaningful way.

The main goal of CONET is to build a strong community in the area of Cooperating Objects, capable of conducting the research needed to achieve the vision of Mark Weiser. Therefore, the CONET project objectives are the following:

Within this project, we are working in the research cluster on Mobility of Cooperating Objects, mainly on the tasks of multi-robot planning under uncertainty and the coordination and control of aerial objects.

URUS: Ubiquitous Networking Robotics in Urban Settings

The URUS project focused on designing a network of robots that cooperatively interact with human beings and the environment for tasks of assistance, transportation of goods, and surveillance in urban areas. Specifically, the objective of the project was to design and develop a cognitive network robot architecture that integrates cooperating urban robots, intelligent sensors, intelligent devices and communications.

The specific technologies developed in the project include navigation coordination; cooperative perception; cooperative map building; task negotiation; human-robot interaction; and wireless communication strategies between users (mobile phones), the environment (cameras) and the robots. Moreover, to ease tasks in the urban environment, commercial platforms specifically designed to navigate and assist humans in such urban settings were given autonomous mobility capabilities. Proof-of-concept tests of the developed systems took place on the UPC campus, a car-free area of Barcelona.

The participation of UPO in the project was twofold. On the one hand, we developed the navigation algorithms for our robot Romeo, allowing it to navigate safely in a pedestrian environment.

On the other hand, we participated in the development of a decentralized fusion system for collaborative person tracking and estimation using all the elements of the system (robots, camera network and sensor network).

COMETS: Real-Time Coordination and Control of Multiple Heterogeneous Unmanned Aerial Vehicles

The main objective of COMETS is to design and implement a distributed control system for cooperative activities using heterogeneous Unmanned Aerial Vehicles (UAVs). In particular, both helicopters and airships are included.

Technologies involved in the COMETS project:

A key aspect of this project is experimentation: local UAV experiments and general multi-UAV demonstrations. Fernando Caballero and Luis Merino were directly involved in the project.
